Giving Composers a Fighting Chance in the Era of AI

by David Fuentes, Ph.D.

Thirty-five years ago, I was looking for a better way to teach melody when I stumbled on an approach I’ve never seen anywhere else. The results were striking. My students’ melodies not only showed stronger tonal and rhythmic coherence compared to those produced by traditional methods, their lines had drive, shape, and soul. In this proposal, you’ll see how this unique approach is perfectly suited to build the exact sort of AI assistant that composers need at this exact moment in time.

Why? Because today, architects, writers, and even surgeons are pressing developers for human-in-the-loop systems: models that enhance human judgment—not override it. The consensus is clear: the best results come when an AI helps shape and explore human ideas—not when it generates complete works all by itself.

However, for reasons that will soon become apparent, developing a truly collaborative AI assistant for composers brings challenges no other field has had to tackle—deeply intuitive, pattern-driven problems that resist standard computation.

Until now.

WHAT IS AICA?

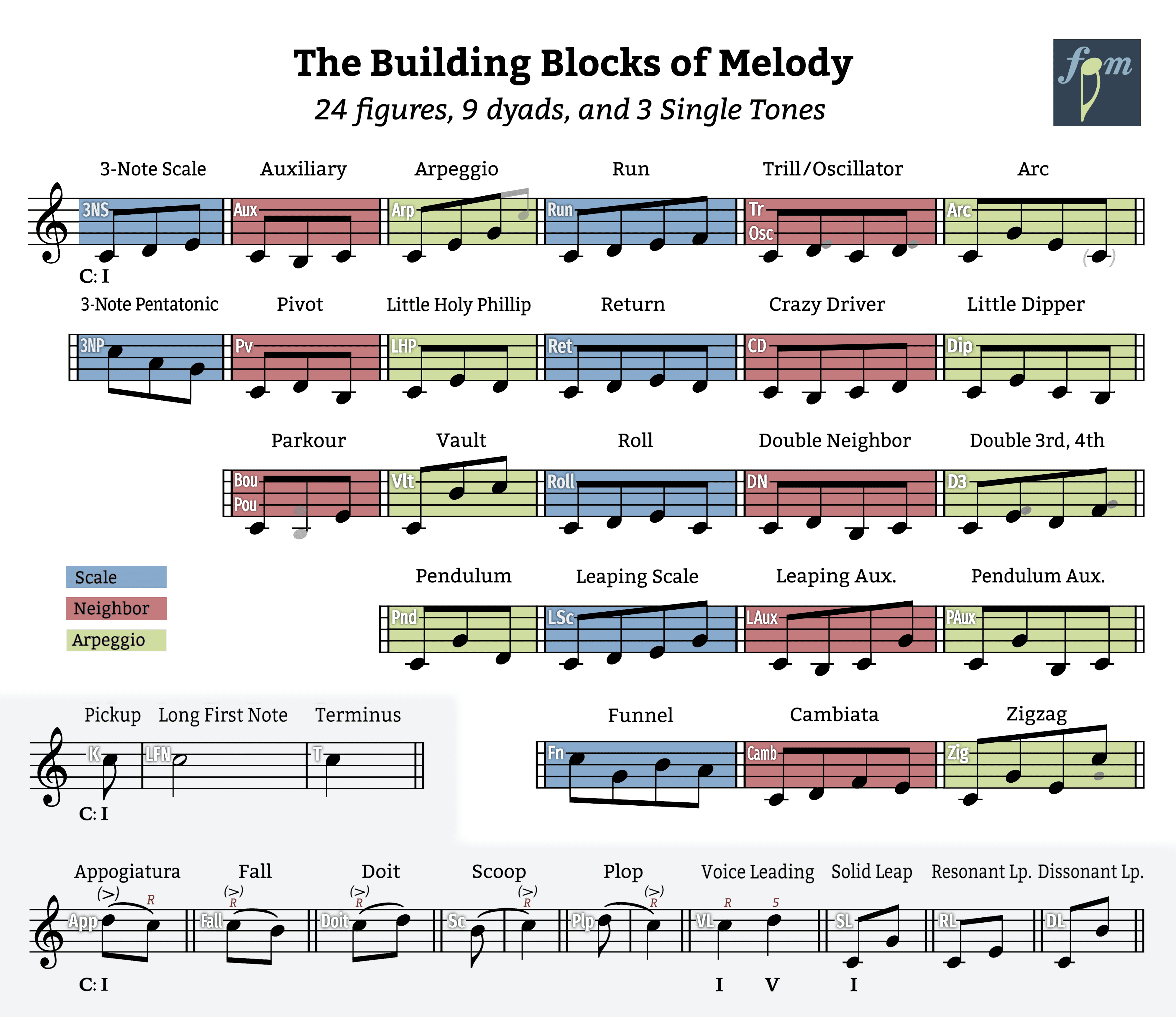

AICA (ī′kŭh), short for “AI Composers’ Assistant,” is a proposed knowledge-based system built on Melodic Figuration Theory (MFT)—a new, four-tiered approach to understanding and writing melody. MFT is fundamentally a theory of observable melodic behavior. It identifies a limited set of 36 common, small-scale patterns—“melodic figures”—that composers have consistently used across tonal styles and genres. By codifying the specific ways these figures behave across key dimensions including harmony, meter, register, and more, MFT captures the consistent, intuitive ways that composers shape and refine the basic building blocks of melody to create tangible, expressive effects.

Current music AI platforms use large language model (LLM)-based systems, which rely on vast datasets and probability to predict the next note. Not AICA. Her strength lies in truly understanding how melody works: the many nuanced, multi-faceted compositional strategies that explain why one figure follows another. This musical agility gives AICA insight into how notes form melodic figures, how melodic figures form melodic gestures, how melodic gestures interact to form compelling phrases, and how composers create flow, variety, surprise, and expressive payoff at precise points within a song or symphony.

AICA will be available either as a stand-alone plugin or integrated into a leading notation platform such as Dorico, Sibelius, or MuseScore.

THE OUTLINE OF THIS PROPOSAL

PART 1 – Five Challenges for AI Music Models.

PART 2 – How Two AI Music Models Attempt Collaboration.

PART 3 – What Composers Actually Need From an AI Tool.

PART 4 – Why Melodic Figuration Theory Will Build The Best Possible AI for Composers.

PART 5 – How AICA Offers a Satisfying Challenge to Developers.

PART ONE: Five Challenges for Current AI Music Models

A few decades ago, when MIDI and music sampling first threatened to replace live musicians, nobody predicted that composers would one day face their own existential reckoning. But here we are. Today, tools like Suno spit out polished songs (lyrics, vocals, backing tracks) from a single text prompt. The prompts—“feel-good acoustic,” “corporate motivational,” “lo-fi funk”—are the very terms clients once used to license tracks from Epidemic Sound, Musicbed, and PremiumBeat. What’s being replaced isn’t artistic vision, but a niche of commercial music production where “good enough” has always mattered more than greatness.

Meanwhile, across the street, classically trained composers eye tools like Google’s Magenta and OpenAI’s MuseNet—or China’s recent NotaGen, a Transformer-based model out of Beijing’s Central Conservatory that’s already sparking debate. These systems revolutionized AI’s grasp of musical structure. Unlike early RNNs (which “forgot” notes after a few bars), Transformers handle long-form coherence. But handling isn’t the same as understanding—and this gap is just the tip of the iceberg. Despite their advances, today’s AI tools still face five critical hurdles that reveal why human composers are hardly obsolete; in fact, we’re the single missing component AI can’t replicate.

1. Coherence.

As I just mentioned, Transformer models offered a way forward. Their attention mechanism lets them take in an entire phrase, section, or even a full piece, and track how notes relate across time. So unlike earlier iterations, which suffered from musical amnesia, Transformers can generate music with much better long-range coherence. But “better” doesn’t mean solved.

While Transformers can learn longer dependencies, they still often do so probabilistically, without the kind of organic, narrative understanding a human composer possesses—an understanding steeped in an intuitive sense of timing, emotion, and, most crucially: relevance.

2. Relevance.

Here’s what I mean by relevance: Composers don’t just shape musical sounds into intriguing gestures and phrases; those shapes, when combined just right, embody our sense of how things are or, even more profoundly, how they ought to be in our world. And when our fellow humans hear what we compose, they’re not just hearing acoustic patterns; they catch what we’ve captured in sound and resonate with it. This incredibly deep mode of communication illuminates why music remains one of the most profoundly human things we do, echoing across every culture and era.

3. Development.

Ask three musicians to define melodic development, and you’ll get at least four answers and several heated arguments. That’s why it’s so surprising to find the clearest definition on Wikipedia: “Development is the process by which a musical idea is restated and transformed during the course of a composition.” The key word is transformed. Restatement alone is repetition; development requires reinvention.

Demonstration #1 (language)

If I say, “Development restates and transforms ideas,” and you reply:

- “Development both restates and transforms ideas,” you’ve added nuance.

- “Development never restates ideas,” you’ve inverted the meaning.

- “D-R-T-I” (acronym), you’ve abstracted it.

- “Transforms ideas, transforms ideas, transforms ideas, transforms ideas,” you’ve built a poor excuse for an advertising jingle.

In music, all four of these versions would qualify as development. And no doubt you or I could come up with a dozen more without breaking a sweat. So the challenge for the composer isn’t whether an idea can be transformed, but which transformations—when combined in just the right way—feel intentional, rewarding, and human.

Demonstration #2 (music)

Let’s listen to two classic “musical sentences”—Beethoven’s 5th and “Happy Birthday”—where a melodic idea is restated (developed) with a few melodic alterations, made necessary because the harmony changes at the response.

Two famous musical sentences

This standard sentence template has produced countless brilliant melodies. In other words, there’s nothing “wrong” with using a formula that works, until it doesn’t. (And we’re about to encounter a melody that doesn’t.)

Now listen to Mozart’s “Eine Kleine Nachtmusik.” Same sentence structure, but with “extra” alterations.

“Eine Kleine Nachtmusik,” by Wolfgang Amadeus Mozart

Mozart’s response shouldn’t work. He changes every single note, warping the melody into what almost sounds like an inversion (but isn’t). Yet it’s brilliant—playful, inevitable, alive. Now hear what happens when we force Mozart’s melody to undergo only the kind of safe, minimal tweaks an AI is likely to default to.

It’s a disaster: Clunky. Robotic. Wrong. But what if the AI, trained on vast datasets, picked up on the fact that approximately 1% of musical sentences invert the response? We’d get this.

Still awful: mathematically plausible, musically nonsensical. Here’s the truth: AI has no clue why Mozart’s version soars while the “more correct” versions crash. It can’t hear the difference between calculated risk and careless mutation because melodic development isn’t about rules. It’s about “fit,” sensitivity, delight.

True, such qualities are all but impossible to model, so AI programmers add a randomness knob called temperature. Turn it up, and the results get wilder; turn it down, and they stay closer to the expected formulas. But no matter the setting, the AI still doesn’t know why one variation hits and another flops. It’s gambling, not composing.
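For readers who want to see the knob itself, here is a minimal Python sketch of temperature sampling. The four candidate notes and their scores are invented for illustration and are not taken from any real model; the function name is likewise my own.

```python
import math

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; temperature rescales the scores first."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores a model might assign to four candidate next notes.
candidates = ["G4", "A4", "C5", "F#4"]
scores = [3.0, 2.0, 1.0, 0.0]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(scores, t)
    print(t, dict(zip(candidates, (round(p, 2) for p in probs))))
```

A low temperature concentrates nearly all the probability on the top-scoring note; a high one spreads it across the alternatives. Nothing in the calculation asks whether a note serves the phrase.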

Human composers don’t just alter ideas; we interrogate them: “Does this twist deepen the narrative?” “Does that note earn its surprise?” And without the human ear, AI’s music is a coin toss: sometimes passable, often laughable, never meaningful.

For the reasons I just raised, melodic development is a much more serious problem for musical AI than long-range coherence.

4. The black box.

At the heart of today’s most powerful AI models sits a striking contradiction: they work astonishingly well, yet even their own developers can’t explain how a given output came into being. This makes true collaboration impossible. If the composer doesn’t like a suggestion, it’s hard to tell the AI why in a way it understands. Instead of being able to guide it towards a more suitable alternative based on musical logic, the composer’s “interaction” consists of prompt-and-generate, modifying the prompt, adjusting temperature, or simply regenerating and hoping for something closer to the desired outcome the second or seventeenth time around.

5. Lifting.

Lifting occurs when AI models reproduce near-identical snippets of copyrighted music—not as inspiration, but as algorithmic plagiarism. Unlike human composers who create something new from influences we intuitively internalize, AI often stitches together phrase fragments from its training data, bypassing homage for theft.

This is hardly rare. Models like Udio and Suno currently face lawsuits for generating outputs that mirror artists’ vocals, melodies, or production styles note-for-note. The issue isn’t just legality; it’s artistic. When an AI regurgitates a Beatles lick, a Beyoncé vocal run, or a chromatic sequence by Bach, it’s not melodic development, it’s photocopying.

The result? A lose-lose: Creators risk litigation, while audiences get music stripped of originality—a collage of unlicensed parts disguised as innovation. For human collaborators, this undermines trust. How can we build new art when the tool’s “ideas” might be stolen goods?

These five hurdles—coherence, relevance, development, the inscrutable black box, and lifting—paint a bleak picture. But a few developers are hacking the lock. What if AI didn’t replace us… but amplified us? Part Two unveils two models betting yes.

PART TWO: Two AI Music Models that Attempt Collaboration

MODEL #1: THE ANTICIPATORY MUSIC TRANSFORMER: A QUEST FOR COHERENCE

The Anticipatory Music Transformer (AMT) isn’t just another AI composer—it’s a bold attempt to fix what most AI music tools get wrong: they handle local patterns well but fall apart over longer spans. Built on the same Transformer architecture behind ChatGPT, AMT differs by ingesting symbolic data (MIDI) instead of raw audio or text.

1. The developers identify four critical flaws to fix in existing AI music.

- Meandering structures (lack of long-range coherence),

- Aimless note sequences (no goal-directedness),

- Arbitrary variations (unmotivated by musical logic),

- Rigid global control (inability to steer large-scale form).

This ambitious to-do list springs from a fundamental insight: Human composers don’t write note-by-note—they shape trajectories. Thus, AMT’s core innovation becomes anticipation: a technical echo of artistic foresight.

2. The Anticipation Mechanism: How AMT Tries to Think Ahead.

Unlike standard models that predict only the next note, AMT mimics human planning through a three-step process:

- Predict: Forecast the music’s state k measures ahead (“What will happen here?”),

- Embed: Encode this future into “anticipatory embeddings,” rich mathematical representations of the predicted future musical context.

- Guide: Embeddings of the anticipated future are fed back to guide the generation of the current musical passage. Knowing where the music is headed allows the model to make more musically coherent and goal-directed choices in the present.

Why this magic trick doesn’t land. At its core, AMT is still pattern-matching—it’s just matching patterns within a larger context. The developers assume that mimicking a composer’s foresight is the key, but they mistake the mechanism for the meaning.

In actual music, composers build anticipation not by seeding fragments of the destination, but by creating a sense of forward momentum and unresolved tension that makes the arrival of the new section feel both surprising and inevitable. A transition’s job is to ask a question, not to leak the answer.

Picture a mountain climber. Every ten feet, she’s shown a panoramic photo of the summit. When she arrives, she feels neither triumph nor elation. In a similar way, AMT spoils anticipation by front-loading the payoff.

This approach doesn’t just dull transitions; it hobbles the model’s ability to address its own stated goals. How can AMT achieve short-term “goal-directedness” if its goal is simply to arrive at a pre-determined set of notes? How can it produce “motivated variations” if the motivation is a mathematical connection to a future state rather than an expressive need in the present moment? And what about development?

AMT doesn’t mimic artistic foresight; it automates a paint-by-numbers schematic. It’s a design born from overthinking the data and under-experiencing the art form it seeks to emulate.

3. AMT and collaboration.

AMT’s workflow feels collaborative:

- You start, it finishes.

- You frame, it fills.

- You set the anticipation window.

- You and AMT volley back and forth until you feel the music is done.

But here’s the catch: when AMT’s response isn’t quite right, you can’t ask why. You can’t question its logic or discuss alternatives. Its decisions are buried in statistical weights.

You and AMT can’t reflect, argue, or adjust based on shared principles. The interaction is one-way: prompt, generate, react. It’s fast, polished, and sometimes useful—but not truly creative. You’re working with a black box, not a sparring partner.

AMT is certainly a leap forward. But its defining concept—anticipation—misses the point of musical drama. Its workflow blocks real dialogue. And its creative core still relies on historical patterns, risking both cliché and unintentional mimicry (lifting).

Summing up, AMT is a beautifully engineered solution to the wrong problem. It perfects the art of looking ahead, but it can’t help a composer understand the ground beneath their feet. This raises a crucial question: what is the right problem?

MODEL #2: AIMÉE AND THE KNOWLEDGE-BASED PROMISE

If a data-driven model like AMT stumbles because it can’t grasp musical logic, the obvious fix is to build an AI that doesn’t guess—one that knows. Enter Aimée, a composer’s assistant from the Netherlands that abandons big data in favor of a different promise: the knowledge-based expert system.

1. The Flaws Aimée Aims to Fix

Aimée was designed to escape the trap of “plausible but meaningless” output by grounding itself in verified musical knowledge. Instead of learning from statistical patterns, it’s explicitly programmed with the rules of theory—harmony, rhythm, and form. The goal: to replace probability with principle.

2. The Core Mechanism: Survival of the Fittest Theory

Aimée fuses two classic AI tools:

- An expert system, which stores the rules of traditional theory.

- A genetic algorithm, which generates musical ideas, filters them through a fitness test, and evolves them over generations.

The result is music that gradually conforms to theoretical correctness. On paper, it’s an elegant, scientific path to composition. So what went wrong?
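To make the pairing concrete, here is a toy Python sketch of a rule-based fitness test driving a genetic algorithm. It is not Aimée’s code: the three rules, the scoring weights, and every function name are assumptions chosen only to show the general mechanism.

```python
import random

C_MAJOR = {0, 2, 4, 5, 7, 9, 11}  # pitch classes of the C major scale

def fitness(melody):
    """Score a melody (MIDI pitches) against three hypothetical textbook-style rules."""
    score = sum(1 for p in melody if p % 12 in C_MAJOR)   # stay in the key
    score += sum(1 for a, b in zip(melody, melody[1:])
                 if abs(a - b) <= 2)                      # prefer stepwise motion
    score += 2 if melody[-1] % 12 == 0 else 0             # end on the tonic
    return score

def mutate(melody, rng):
    """Copy the melody and nudge one randomly chosen note by up to two semitones."""
    child = list(melody)
    i = rng.randrange(len(child))
    child[i] += rng.choice([-2, -1, 1, 2])
    return child

def evolve(generations=60, size=20, length=8, seed=1):
    """Evolve random melodies; return the fittest one and the best score per generation."""
    rng = random.Random(seed)
    population = [[rng.randint(60, 72) for _ in range(length)] for _ in range(size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        survivors = population[: size // 2]               # keep the fitter half
        population = survivors + [mutate(rng.choice(survivors), rng)
                                  for _ in range(size - len(survivors))]
    population.sort(key=fitness, reverse=True)
    history.append(fitness(population[0]))
    return population[0], history

best, history = evolve()
print(best, history[0], history[-1])
```

Because the fitter half always survives, the top score can only hold or rise from one generation to the next. Notice what the fitness test rewards: rule compliance, and nothing else.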

3. The problem with training on traditional music theory.

Imagine a world where cakes exist, but recipes don’t. A few gifted bakers create light, luscious layers and flawless, fluffy frosting, yet they can’t explain how. So some scholars swoop in and catalogue flour-to-fat ratios, diagram starch lattices, and formulate the necessary conditions for sugar crystallization. They turn these findings into cookbooks, and their students dutifully bake flavorless pucks that no amount of icing can save.

That’s what happens when we teach melody writing using traditional theory—something never intended as a method. We give students a lab report instead of a recipe. But it wasn’t always this way.

Early theorists speculated with flour still on their hands. Gioseffo Zarlino’s The Institutions of Harmony (1558) describes the quality and best applications of intervals the way a chef does with spices. Johann Joseph Fux’s Gradus ad Parnassum (1725), the species counterpoint manual still on desks today, walks students through step-by-step melodic drills, each designed to teach a single skill. For them, theory and practice were part of the same conversation.

But eventually, Enlightenment ideals shifted the focus from showing how to explaining why. By the 19th century, theory had become a detached commentary—less about making music than dissecting it.

Today, that gap has only widened. Students who follow traditional theory’s rules write melodies that earn A’s—but are instantly forgettable. Still, it’s not their fault, nor their teachers’. Long ago, musicians “in the know” thought it feasible to adapt the rules of analysis into a method for writing melody.

4. A Flawed Collaboration.

Is basing an AI on rules derived from overarching principles rather than melodic behavior a foundational error when the goal is collaboration? In other words, do composers respond to the basic elements of music in terms of theoretical premises or hands-in-the-dirt practicality?

Give Aimée a melody, and she’ll harmonize and arrange it, rule by rule. But it can’t be a conversation between like-minded creators. It’s like co-writing a poem with a dictionary.

Ask Aimée to achieve more registral contrast, for example, and she’ll draw a blank. Why? Because traditional theory says nothing about how to shape register. The result: a rule-following assistant that generates technically sound but emotionally empty music.

Aimée shows why escaping the black box is not enough. A transparent system is useless if the knowledge it holds was never intended for creating music—but rather for analyzing it. Which leaves us with the most important question of all: What if we could build an AI around practical behavior rather than analytic principles?

PART THREE: Now Hiring: The Composer’s Ideal Assistant

In this short section, we imagine what composers actually need by drafting a job description for a truly helpful, truly collaborative assistant.

Job Title: Assistant to a Human Composer

Position Type: Intuitive, responsive, non-authoritarian

Start Date: Yesterday

Minimum Qualifications:

- Be fluent in musical terminology—enough to know what the composer likely means even if she lacks the specific vocabulary to express it.

- Make suggestions grounded in how composers actually shape the musical surface—not in theoretical systems designed more for analysis than creation.

- Have a broad and deep understanding of melodic development techniques.

- Recognize which aspects of the composer’s music to repeat, vary, or contrast as his music unfolds. If asked, be able to describe the effect these operations are likely to achieve.

- Recognize recurring ideas—even if transformed by transposition, inversion, variation, fragmentation, reordering, etc.

- Be able to suggest specific ways to add tension, contrast, or expressive weight at specific moments.

- Be fluent in harmony, but not bossy about it.

- Be a calm, steady presence as the composer revises, reimagines, and wanders.

- Always defer to the composer’s ear, instinct, and vision.

Bonus if you:

- Can suggest an option overlooked by the composer and explain what makes it worth auditioning.

- Can help a composer get unstuck—not by guessing what they want, but by offering alternatives.

- Begin to acquire a sense for the composer’s preferences and “go-to moves” such that you can: (1) make suggestions resonant with her “voice,” and (2) urge her, at just the right moments, to try something new.

Why the right knowledge matters. An applicant with these qualifications would be indispensable. But building such a system is impossible without a revolutionary kind of knowledge at its core. It requires a system built not on lifting patterns from finished works, but on observable ways that music behaves. And this requires a new theory for a new time.

PART FOUR: Building AICA’s Toolkit

As mentioned at the beginning of this proposal, AICA is a proposed knowledge-based system built on Melodic Figuration Theory (MFT)—a groundbreaking, four-tiered approach to understanding and writing melody. By identifying a finite set of 36 melodic figures—kinetic, semantic building blocks that recur across eras and styles—MFT provides a tangible grammar for melody. Its figures aren’t abstract constructs but the very chunks our brains use to process and create melody, much as chords are integral to harmonic thinking. While chords have given us a concrete yet pliable object for precise observation of harmonic practice, we’ve long lacked anything comparable in melody. That gap is precisely what melodic figures fill, allowing us to compare one musical situation with another and, for the first time, assemble a corpus that marks the difference between normal and special melodic behaviors. The fact that this vocabulary was never formally identified or taught, yet appears consistently across centuries and styles, suggests that composers must have drawn on it intuitively to achieve the remarkably similar expressive effects we can now document. And most relevant here, the composer’s intuitive decisions are not only observable, but programmable.

Current AI music models, however sophisticated, falter in predictable ways: their long-range coherence is fragile, their variations sound arbitrary, their “development” is mechanical, their reasoning opaque, and their outputs sometimes lift wholesale from existing works. By contrast, AICA is built on MFT’s four tiers of melody. Tier 1 (Melodic Vocabulary) arms AICA with the ability to identify and manipulate these fundamental melodic figures. Tier 2 (Five Dimensions of Melodic Behavior) provides insight into how these figures behave across harmony, meter, register, trajectory, and contour. This allows AICA to understand subtle compositional strategies, such as pinpointing ideal spots for harmonic tension, adjusting metric placement for expressive impact, and shaping the flow and emphasis of a phrase. By codifying these behaviors, AICA can suggest solutions that resonate with a composer’s intent, offering genuine musical intelligence rather than just “good enough” output.

Finally, Tier 3 (Melodic Syntax) and Tier 4 (Melodic Schemas) enable AICA to understand how melodic figures combine to form gestures, phrases, and larger musical structures. This means AICA can offer specific, musically meaningful strategies for melodic development—repeating, varying, or contrasting ideas in ways that feel intentional and rewarding. Instead of a black box that provides inexplicable suggestions, AICA works with a defined body of knowledge that composers themselves have relied on for centuries.

The following applications are drawn from A Brief Introduction to Melodic Figuration Theory.

Tier 1: The Vocabulary of Melody

Melodic Figuration Theory proposes that every tonal melody is built from a shared, finite vocabulary of twenty-four 3–4-note figures, nine dyads, and three single-tone options. Each of these building blocks bears a distinct melodic fingerprint—so even when it’s transposed, inverted, or rhythmically reshaped, we can still recognize it. Arming AICA with this deep “vocabulary” lets her recognize exactly what the composer presents, rework that with precision, and offer musically coherent alternatives.
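The idea of a fingerprint that survives transposition and inversion can be sketched in a few lines of Python. MFT’s actual figure definitions are not reproduced here; this sketch assumes, purely for illustration, that a figure can be identified by its sequence of scale-step intervals, and the example figure and function names are hypothetical. Rhythm is ignored entirely, so rhythmic reshaping leaves the fingerprint untouched.

```python
def intervals(degrees):
    """Successive differences between scale degrees, measured in scale steps."""
    return tuple(b - a for a, b in zip(degrees, degrees[1:]))

def fingerprint(degrees):
    """Interval pattern normalized so that a shape and its inversion match.

    Transposition leaves the intervals unchanged. Inversion flips every
    sign, so the pattern is flipped whenever its first nonzero move descends.
    """
    steps = intervals(degrees)
    first_move = next((s for s in steps if s != 0), 0)
    return tuple(-s for s in steps) if first_move < 0 else steps

# Hypothetical three-note figure: step up, then step back (a neighbor shape).
original = [1, 2, 1]
transposed = [5, 6, 5]
inverted = [1, 0, 1]
different = [1, 2, 3]  # two steps up: a different shape

print(fingerprint(original) == fingerprint(transposed))  # True
print(fingerprint(original) == fingerprint(inverted))    # True
print(fingerprint(original) == fingerprint(different))   # False
```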

Tier 2: Five Dimensions of Melodic Behavior

[1] Harmony. Melodic Figuration Theory holds that each melodic figure defines its own harmonic structure: taken together, the way each note moves to the next reveals which tones normally behave as chord tones and non-chord tones. This lets AICA identify likely chord tones in real time—even when no chords are playing. In turn, she can offer straightforward harmonizations, suggest inventive reharmonizations, pinpoint ideal spots for harmonic tension, and even recognize moments where a melody might benefit from deliberate harmonic contradiction.

[2] Metric placement. By showing how a figure’s position within the metric cycle affects its expressive impact, Melodic Figuration Theory gives AICA a power other AI composers don’t even consider: adjusting metric placement to better match a composer’s intent—whether it’s to make a figure fly, flip, flounder, or bellyflop. And when the composer isn’t sure what she wants, AICA can help her audition figures in multiple alignments, offering a real-time sense of what different kinds of momentum might imply.

[3] Trajectory. Because every figure has its own gait—some stretch, some squat, some leap, others glide—AICA can help the composer shape every touchpoint and pathway within a phrase, controlling pacing, flow, momentum, and emphasis. Each trajectory leaves a distinct musical imprint. And because AICA knows how the precise timing of a leap can make the difference between a seamless link and an accented one, she can help composers explore what could be before committing to what should be.

[4] Register. Considering how strongly register shapes both musical feeling and musical design, it’s astonishing how little serious attention it’s received. But because Melodic Figuration Theory brings a new awareness of melodic figures—especially how they behave as they negotiate registral space—AICA can now lay out clear ways to use registral slope and span to isolate melodic strata, bridge registral zones, and set up then exceed registral borders. The result? Melodic decisions that formerly felt like guesswork become clear, controllable, and packed with expressive force.

[5] Contour. Melodic Figuration Theory enables AICA to grasp the key factors that shape the three layers of melodic contour—macro, mezzo, and micro motion—and do so both separately and in tandem. Whether the composer wants to echo a gesture’s shape, push against it for contrast, exaggerate it for emotional force, or embed it subtly (subliminally) across an entire passage, AICA’s deep knowledge of each figure’s behavioral traits will allow her to suggest musical options that inspire the composer’s imagination.
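As one small illustration of dimension [2], auditioning a figure in multiple metric alignments can be sketched in Python. The sketch only enumerates alignments; how MFT weighs the expressive effect of each one is not modeled. The four-note figure, the 4/4 bar, and the function names are all assumptions made for the example.

```python
from fractions import Fraction

def alignments(durations, beats_per_measure=4, step=Fraction(1)):
    """For each possible starting beat, list the beat position of every note onset."""
    results = {}
    start = Fraction(0)
    while start < beats_per_measure:
        onsets, position = [], start
        for d in durations:
            onsets.append(position % beats_per_measure)
            position += d
        results[start] = onsets
        start += step
    return results

def notes_on_downbeat(onsets):
    """Indices of the notes whose onset falls on beat 0 (the downbeat)."""
    return [i for i, beat in enumerate(onsets) if beat == 0]

# Hypothetical four-note figure in quarter notes, tried at each beat of a 4/4 bar.
figure = [Fraction(1)] * 4
for start, onsets in alignments(figure).items():
    print(f"start on beat {start + 1}: downbeat note index {notes_on_downbeat(onsets)}")
```

Each alignment puts a different note of the same figure on the downbeat, which is the kind of difference a composer would then audition by ear.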

Tier 3: Melodic Syntax

Every melody begins with a proposition—a gesture—the smallest intact unit of melody. To build a gesture, the composer or AICA combines a melodic figure with rhythm; the process can go in either direction. Because rhythm and meter are so integral to MFT,

Melodic syntax focuses on ways each gesture naturally triggers a response, giving melodies their forward logic. And because Tier 2 gave us a truly multidimensional understanding of melodic behavior—insights into harmony, metric placement, trajectory, register, and contour—AICA is fully equipped to offer (at least) 25 strategies to repeat, 25 to vary, and 25 to contrast any melodic proposition: all in engaging, musically meaningful ways.

Tier 4: Melodic Schemas

Schemas aren’t rigid templates but shared reference points—cognitive shortcuts composers use to navigate creativity within familiar frameworks. AICA’s deep knowledge of each figure’s characteristic behaviors gives her exceptional agility in working with every known schema—from classical forms and jazz turnarounds to country licks and rock riffs. She can also leverage MFT’s five dimensions (harmony, metric placement, trajectory, register, contour) to define brand-new schemas—whether based on registral shifts, metric quirks, contour arcs, or any other melodic dimension. And when it comes to building phrases, AICA can draw on both the well-known, flexible phrase schemas and the tighter, MFT-driven schematic constraints (distinct syntactical behaviors) that paradoxically set the composer’s imagination—and the music—free.

PART FIVE: Developer Appeal

In Part Three, we posted a job description for the ideal AI collaborator, outlining the critical qualifications. This raises the obvious question: Is there a candidate that can actually meet these demanding standards?

There is! AICA can, because it’s built on a revolutionary foundation: Melodic Figuration Theory.

For potential partners and developers, this project isn’t just another music AI. It is an opportunity to pioneer a new class of creative tools by tackling three distinct and rewarding challenges.

1. An Invitation to Build True Musical Intelligence

Most AI music is a technical marvel of pattern-matching, a black box that operates on probability. AICA offers a more ambitious challenge: to build a system with an explainable, elegant internal logic. The goal is to move beyond mere syntax—the statistical likelihood of the next note—and engage with the semantics and pragmatics of melody. Developers won’t be training a model to imitate; they will be codifying a system that understands compositional intent. This is the chance to build not just a pattern-matcher, but a partner capable of strategic, musical reasoning.

2. The Tool Artists Are Actually Asking For

The market is saturated with tools that aim to automate creative work out of existence. Yet, as we’ve seen, serious artists are calling for the opposite: tools that augment their skills and enhance their creative process. AICA is designed from the ground up to meet this demand. It is not a product generator; it is a process enhancer. It is for the composer who wants to keep their hands in the dirt and feel the satisfaction of crafting something themselves. By empowering the user at every step, AICA becomes an indispensable assistant—the kind of tool that users don’t just tolerate, but love.

3. AICA is Ethical, Educational, and Essential

In an era defined by lawsuits over data scraping and algorithmic plagiarism, AICA offers the ethical high ground. It requires no opaque training data and runs no risk of “lifting” copyrighted material, because it doesn’t learn from a dataset of finished works. Its knowledge is derived from the foundational principles of melodic behavior. This “clean” approach makes AICA a responsible, legally sound, and genuinely original system.

This foundation does more than mitigate risk; it unlocks a profound secondary function. Interacting with AICA becomes a learning experience in itself. As users see MFT’s concepts applied to their own ideas, they don’t just get better music—they become better composers. This transforms AICA from a simple utility into a lifelong pedagogical partner, opening a vast and untapped market for a tool that can both create and cultivate.